RDFaS

A cluster based RDFa spider.

The RDFa Web Spider (RDFaS) was my 3rd year dissertation project.

This page was migrated from rdfas.com

Abstract (from the final report)

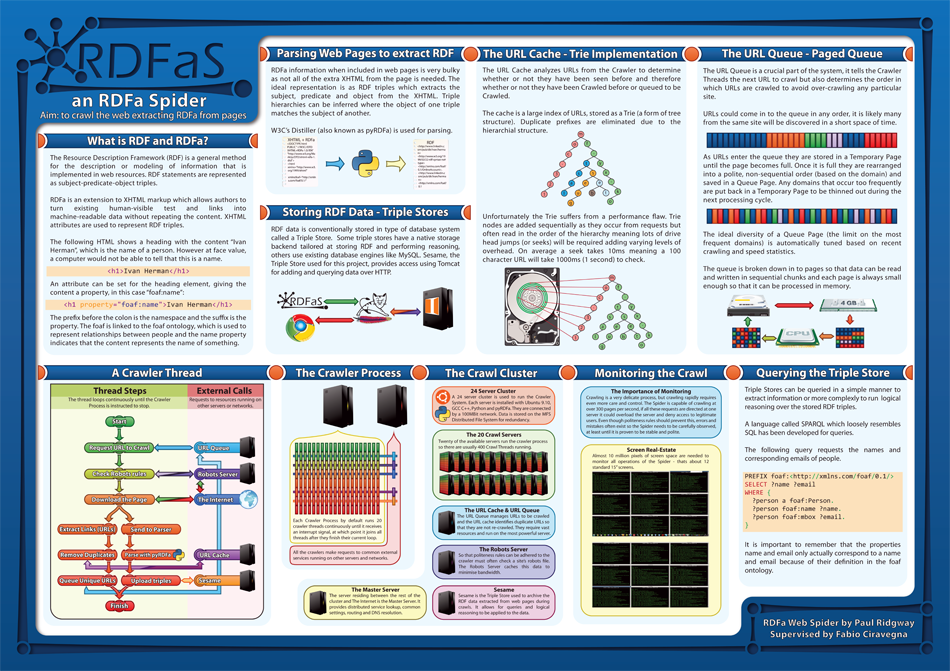

Current web 'standards' formalize formatting and provision of information on the Web, but little of this information can be put into context by a machine without heavy analysis. A proposed XHTML extension called RDFa allows the content creator to specify the type of data on a web page which implies or specifies the context and relationship of this data. This allows automated processes to potentially discern the meaning of the information. There are many search engines for several different types of media, but most commonly they allow the user to search content on the Web, return results based on a relevance match which is often done by the frequency in which the search term appears in the document. The aim of this project is to index pages which contain RDFa data for searching, tackling issues involved with and providing more research crawling and indexing large numbers of pages and enormous amounts of data.

Documentation

I was required to create two reports and a poster for this project. Below a download link for each, in PDF format, can be found below:

Survey & Analysis | Final Report | Poster

A Side Project

Over the summer I worked for the OAK group at the University of Sheffield. During that time Matthew Rowe and I developed the underlying framework for a person search system built on Hadoop and other technologies.